‘(Un)Stable Diffusions’, a two-day symposium on AI’s publics, publicities, and publicizations, was organized by Machine Agencies in collaboration with the Applied AI Institute, Concordia University (May 23-24). Held in a hybrid format, the symposium brought together scholars, practitioners, and students from across the world to discuss generative artificial intelligence (AI), machine learning, ChatGPT, and a number of other topics that seem to be dominating our dinner table conversations at the moment. Attendees also took part in two keynote panels entitled ‘AI’s (Un)Stable Diffusions?’ and ‘Shaping AI’.

Keynote Panel: AI’s (Un)Stable Diffusions?

The first keynote panel, ‘AI’s (Un)Stable Diffusions?’, was conducted in a hybrid format with four leading theorists working on science and technology studies (STS) and AI. Dr. Marion Fourcade, professor of sociology and director of Social Science Matrix at UC Berkeley, spoke about how, in the wake of COVID-19, AI-based systems are becoming increasingly prevalent as more and more of us migrate to online and digital learning practices. She discussed how apps and programs have become an integral part of the teaching system, bringing not only students but also parents into the pedagogical process. Many teaching apps are now built with activities for parents, to make sure they are actively involved in their children’s learning and classroom activities; in this way, parents who were previously less attentive to their children’s progress can be drawn in. As innovative as it may seem to track parents’ involvement in their children’s classroom activities, the migration of education online has also forced parents to subscribe to a multitude of apps and online services that collect their personal information, which raises the issue of commodification. “In other words, what used to be essentially free has suddenly become a commodity that parents must pay for in order to actively participate in their children’s classroom activities,” noted Dr. Fourcade, who is currently a fellow at the Max Planck-Sciences Po Center for Coping with Market Instability.

The second panelist, Dr. Beth Coleman, Associate Professor of Data and Cities at the Institute of Communication, Culture, Information, & Technology and the Faculty of Information at the University of Toronto, discussed the limitations of generative AI. She cautioned that while we should look forward to AI’s promise, we should also make sure there are limits to its use, context, and extent. In her presentation, she provided alternatives to generative AI and talked about hallucination versus disinformation, anthropomorphic versus mirroring, open/closed versus accountability, and responsibility versus regulation/consent. In her view, the conversation about generative AI must shift away from a binary view of open versus closed and focus instead on framing it in terms of accountability and transparency. Given that algorithmic harms disproportionately affect the lives of the poor, the marginalized, and women, it is time to address what she called “the engineering problem” by critically examining “what was put into it to get what out of it.” Highlighting the ways in which results from algorithms are frustratingly predictive and categorical, the director of the City as Platform Lab asked, “what is it (AI) even talking about?” A major problem with algorithmic systems is that they categorize people without allowing them any meaningful opportunity to be seen or heard. As Dr. Lucy Suchman emphasized, it is often impossible to know how a system interprets you: it is a black box that AI promoters are intent on keeping inaccessible. She argued that there is nothing inevitably ‘self-evolving’ about AI, contrary to what promoters of AI have been claiming over the years. This also links to Dr. Coleman’s point that AI operates on pre-existing data in a very linear, structured, and coded way, which makes algorithms prone to causing harm, with repercussions that range from the unsustainable extraction of natural resources to the maintenance of the status quo through labour exploitation to a mindless consumerist culture. In other words, the production of AI systems accelerates the extraction of certain minerals, and with more AI systems in operation, algorithmic harms multiply all around.

The ways algorithms are encoded, produced, and reproduced segued into the work of Dr. Mona Sloane, a sociologist working on design and inequality, specifically in the context of AI design and policy. She is an Assistant Professor at NYU’s Tandon School of Engineering, a Senior Research Scientist at the NYU Center for Responsible AI, and a Fellow with NYU’s Institute for Public Knowledge (IPK) and The GovLab. Today, many companies use AI systems for employee recruitment, and Dr. Sloane pointed out how recruiters have resorted to algorithmic systems and Boolean search, built on the operators AND, OR, and NOT, to find candidates. As more and more companies rely on such searches to find the right employee for the job, it is very likely that people’s access to employment will be mediated by automation and algorithmic systems rather than by a face-to-face interview. Dr. Sloane added that COVID has given rise to more AI-driven access to the labour market. Digital recruitment based on Boolean search ultimately suggests that one’s successful entry into the labour market comes at the price of being reduced to a manipulation of operators. Simply put, algorithmic recruiting not only overemphasizes search engines but also devalues the social and emotional skills that are key to a sustainable labour market.

Finally, Dr. Lucy Suchman’s presentation focused on the increasing use of AI and algorithmic systems in the targeting of persons by powerful countries such as the USA. A Professor Emerita of the Anthropology of Science and Technology at Lancaster University in the UK, she presented the unequal and often illogical ways in which powerful countries exert their power over poorer countries, using aerial and digital surveillance and armed force to keep peace and order and to fight against anything that the AI system considers an ‘imminent threat’. Her presentation brought to light the ways in which situation-based or location-based algorithmic systems have become a means of waging war against anything that is deemed a threat based on AI data.

Indeed, in light of such harmful and illegitimate practices, the governance of AI has become a matter of ethical responsibility. In Dr. Suchman’s view, narratives have constructed AI as an ‘autonomously evolving thing’, making people believe that we cannot possibly know the big algorithmic systems; as a result, AI processes are obscured and not held accountable. She highlighted that such narratives ‘primarily serve the interests of AI promoters’.

However, she noted, the history of AI shows that there is nothing inevitable about technological developments.

Hence, as she rightly pointed out, the problem lies in co-opting ‘signal intelligence’ as a stand-in for ‘human intelligence’ in the targeting of aerial strikes, both in areas of US operations in Afghanistan and Iraq and in areas of undeclared war fighting in Yemen, Somalia, and Pakistan. To what extent, then, do we understand generative AI systems as part of a networked system? Dr. Suchman questioned a situational awareness that is derived from generative AI systems, algorithmic sequences, and data, all of which ultimately hinge on pre-existing data. This data is mostly obscured, partial, and discriminatory towards some and not others. It is unfortunate that our society functions at the whims of a process that renders data into an ‘actionable problem’. The promise of ‘algorithmic warfare’ incorporating computer vision, pursued in the name of defense by the US and its allies, has become a truly imminent threat to others and dehumanizes military practice as a form of modern warfighting. The danger, then, lies in irresponsibly treating any place as an ‘imminent threat’ on the basis of data, which then becomes grounds for aerial strikes in the name of defense by the US and its allies.

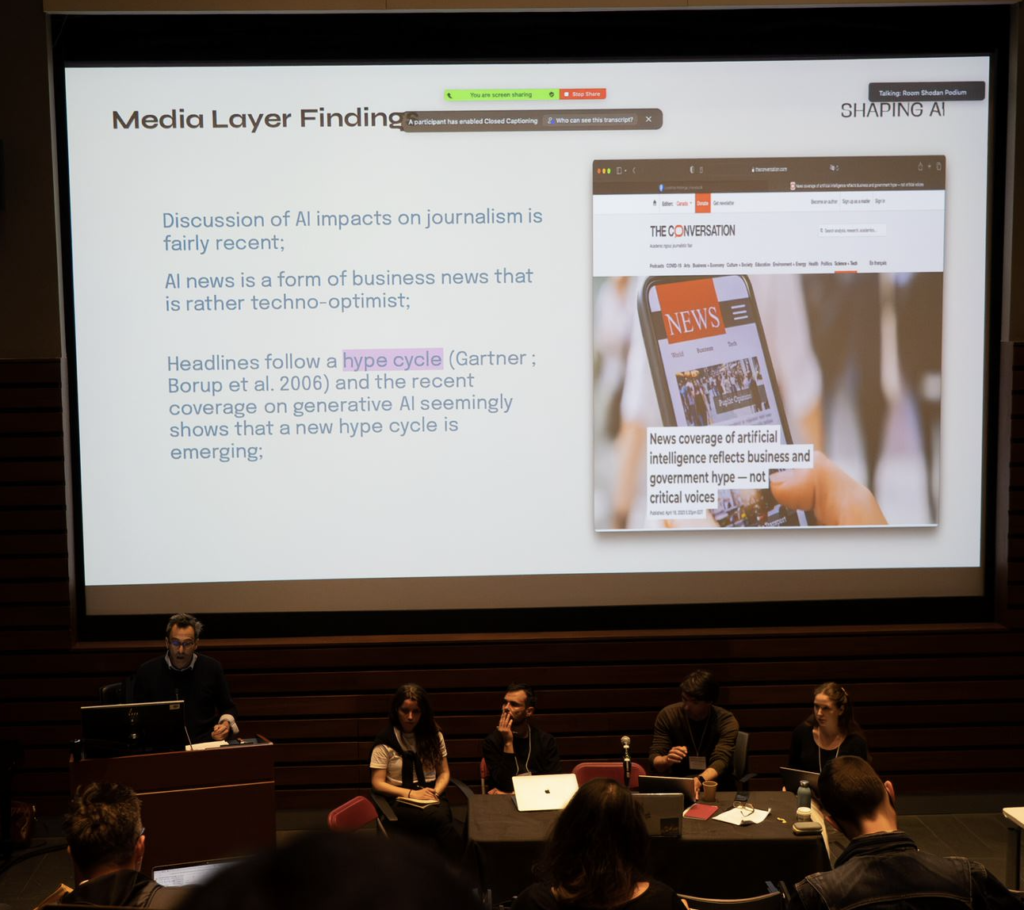

Second keynote panel: Shaping AI

The keynote panel on the second day saw contributions from research teams from France, Canada, Germany, and the UK as part of ‘Shaping AI’ (2021-2024), a multinational research collaboration between the Humboldt Institute for Internet and Society, Berlin, the medialab at Sciences Po, Paris, the Centre for Interdisciplinary Methodology at the University of Warwick, the NENIC Lab at INRS Montreal, and the Algorithmic Media Observatory at Concordia University. Focusing on the decade of AI’s “deep learning revolution” (2012-2021), the teams interrogated the shaping of AI and engaged critically with AI’s media representations, policy framings, and scientific debates. Crucially, these were also epistemic reflections on how the teams themselves were shaping AI, including the controversiality of AI in recent times. A major part of the discussion highlighted the speculation and controversies surrounding AI and why they are perhaps necessary for further speculative inquiry into AI systems.

Team Canada highlighted that AI news is treated as a form of business news and is highly techno-optimist, potentially obscuring the limits and dangers of AI. Hence, they emphasized the need for critical media coverage of the political economy of AI in the current media landscape. A deeper problematization of the controversies surrounding AI use in Canada would also contribute to a more critical voice, the team argued.

Team Germany argued that a holistic mapping of the AI controversy must connect policy with qualitative findings. Similarly, Team UK presented an evaluative inquiry into shifting AI controversies so that we stop treating AI as a Pandora’s box. They emphasized the need for public literacy around AI so that AI controversies start to appear less impossible to solve.

Finally, Team France, in their effort to rethink the AI controversies, posed a question: How can AI be thought about differently? They pointed out that the hype and fear around AI could be lessened by making the debates and narratives around AI more socio-centric, shifting the discourse of AI controversy to other facets of life that are more socially relatable, easily understood, and transparent.

As a whole, the panel saw a rich discussion of some of the timely issues facing AI, such as the ‘trap of newness’, the ‘promises and perils of AI’, and the claim that ‘regulation inhibits innovation’. The research collaborators and audiences agreed that there is a need for regulation and for clarity on how AI regulations are being designed at the policy level, what the stakes of AI are, and, most importantly, the roles each actor plays. All of this hints at the need for a re-infrastructuring of AI systems so that they are more attuned to social engineering.

In sum, the keynote panel highlighted the controversiality of AI rather than the controversies of AI.

By Amrita Gurung, PhD student in Social and Cultural Analysis

Photo credit: Peter Morgan